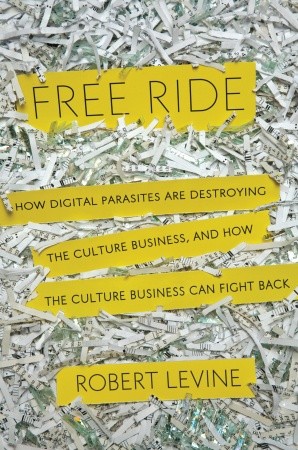

further comment posted on Maria Popova’s post on Brainpickings.org: “Free Ride: Digital Parasites and the Fight for the Business of Culture”:

“Maria, I agree with you fully, there are dubious practices out there regarding online content, which may endanger the creation and curation of the culture that we want. Also, there are interesting new practices and protocols emerging for curating, etc., and I would like to contribute to fostering and protecting such wonderful things as Brainpickings/Brainpicker.

However, I think that distinguishing good from parasitic/unethical/illegal is quite subtle and complex, as shown by the jurisprudence over aggregation and by the Romenesko case. It is to help work out these issues that I offer observations about your practices versus those of Huffington Post and others.

As a side note, personally I am especially interested in issues of algorithmic curation: recommender systems, design for serendipity, applying cognitive science to reading environments, etc. In many cases, such systems aggregate and operate upon creative or curatorial work done by many people, so they may raise tricky questions of whom to credit for a discovery, and how.

Eli Pariser (of “The Filter Bubble”) recently at MassHumanities 8 made an argument that we need some things to be human-curated because machines can’t do the type of serendipity and discovery we need. I’m not convinced one should essentialize and separate these two dimensions: humans can be mechanical — witness most newspapers and newspaper articles — and algorithms can deliver serendipity and surprise. What we really have, now and increasingly, is cyborg curation, i.e. blended human and algorithmic work. Consider a search engine: algorithmic, but based on large-scale harvesting of human curatorial intentionality in the form of links and content. Tools like Google Reader and Twitter dramatically expand my ability to receive human-curated and created work from hundreds of diverse sources, efficiently and egalitarianly.

notice for 1968 presentation by Douglas Engelbart on "augmenting human intellect". Referred to as the "mother of all demos"

The real question is how to build systems that serve our needs (including the incenting of creation and curation, not just the end-user experience). I think one good way to frame the goal is that we are “augmenting human intellect”, as Engelbart put it in 1962.

As I see it, one reason there will be a large algorithmic component to future “curation” is that, from an end-user’s point of view, relevance and serendipity and value are individual, thus very much enhanceable by personalization. Economically, human curators can’t be doing personalized curation for every end user, so machines will play a big role there.

. . . .

Anyway, back to the issue of ethical/legal distinctions between curation and parasitism. I read the helpful paper and article by Kimberly Isbell about aggregation legal issues and best practices. Applying this to your discussion of Free Ride and parasitism (correct me if I’m wrong), it seems you focus on two main means of distinction:

1) crediting

2) commercial use

So to take 1), crediting:

> without crediting sources of discovery…it’s anywhere between unethical and downright illegal

I just observe that the overwhelming norm, across media, is that people don’t credit their immediate discovery source. Some books may have thorough acknowledgements, academic work may cite works, workshops or conversations which led to ideas, but these seem to be exceptions. If I look at most articles in magazines online or off, or blogs, etc., I don’t think it’s common for each element’s source to be credited. On Twitter, which is perhaps the emerging super-discovery platform, there’s barely room to credit, and the difficulties are suggested by the fact that of the 85 most recent @brainpicker tweets I looked at yesterday at noon, I counted only 5 with in-tweet credit (RT, via, HT, etc.).

I think there are many factors that incline people to not identify discovery sources, not just lack of ethics — it may be considered irrelevant, edited out for space, thought to be undesirably revealing of sources or journalistic methods, the discovery may have been algorithmic and not clearly creditable, etc.

Legally, I don’t see strong precedent for requiring disclosure of sources: as far as I can tell, the law in this area, such as around copyright and hot news, concerns reuse of material, and doesn’t address sources of discovery. Topics and facts are not copyrightable, and practically, it may be very difficult to prove where a media source discovered any given item. You suggest that HuffPo’s discovery of the AAS item from a source other than you is “statistically” unlikely, but that sounds like it would unfortunately be a difficult case to make, legally or otherwise. Would I want arbitrary sources out there judging my blog posts or tweets as unethical or illegal based on statistical likelihood of my topic coming from them? That sounds like exerting ownership of ideas, which has been explicitly rejected by our courts.

As far as how credit may be given, I’d suggest that explicit credit in the text of the piece is much better than implicit credit in the form of a link. What’s on the other end of a link may disappear, change, be offline for a particular reader at any particular moment, or effectively not be discovered/admitted as evidence in any legal test. We can also predict that users will frequently read without following out-links; so, for example, comparing the Brainpickings article that linked to AAS with the HuffPo article that explicitly named the AAS exhibit, I would guess that readers of the HuffPo article were far more likely, say 100x, to learn about the exhibit. I know you do usually name creators/sources in Brainpickings, of course.

On point 2), commercial use:

I infer that you make a distinction between non-commercial Brainpicker/Brainpickings and, say, commercial HuffPo because of the “commercial” test in the fair use exception to copyright. From working for some years at a not-for-profit that had commercial operations, I’ve learned that the delineation of “commercial use” can be quite complex. For example:

> The Twitter example I find irrelevant – the curation I do there isn’t benefitting me in any way
>
> Twitter is not “monetizable” in the way HuffPo..

There are many ways that Twitter posting is both directly and indirectly monetizable. For example, you can do what a number of feeds already do, and have “sponsored posts”, disclosed or not. There are many marketers who pay people for favorable tweeting, along with favorable reviews, blog posts, and comments. Whether you do this or not, it means Twitter is not prima facie non-monetizable. Twitter links can also earn associate fees, as yours do: many Brainpicker links lead to Amazon links, which carry a Brainpicker associates tag that lets you earn commission on sales. Your Twitter links also often lead to Brainpickings, on which you solicit donations.

More broadly, having a large following on Twitter is a clear asset in many realms, such as applying for any media- or social-networking-related job. You noted that “followers…[are] a different kind of currency.” If you get a social-media fellowship at MIT or Harvard’s Nieman Foundation, or get writing/curating work at the Atlantic, would you really say that having 100k+ followers had nothing to do with it?

My experience is that unless you are a registered not-for-profit organization, and your activity falls clearly within the not-for-profit mission of that organization, claiming non-commercial use is not clear-cut. It doesn’t necessarily matter whether you are, de facto, not making money; what matters is your legal status and whether the activity is in keeping with the grant of that status. Practically, individuals or any party not affirmatively classified as not-for-profit can often encounter difficulties claiming the fair use exemption this way.

You may point out that you are providing a “public service,” and give your curation for free. But any commercial Web site might also say it performs a public service by offering freely accessible content. Ad monetization can be, and frequently is, avoided by readers’ use of, say, AdBlocker or, as in your case, Google Reader, which sites like HuffPo don’t prevent me from using.

Anyway, I thank you again for the cabinet of wonders that is Brainpickings/picker, and hope that my ruminations may be of some help. I’d like to keep in conversation as I work on my own discovery-tool / curation projects, and perhaps publish some findings this year.”

comment submitted to TechCrunch article “True Or False? Automatic Fact-Checking Coming To The Web – Complications Follow” by Devin Coldewey, 11/28/2011.
